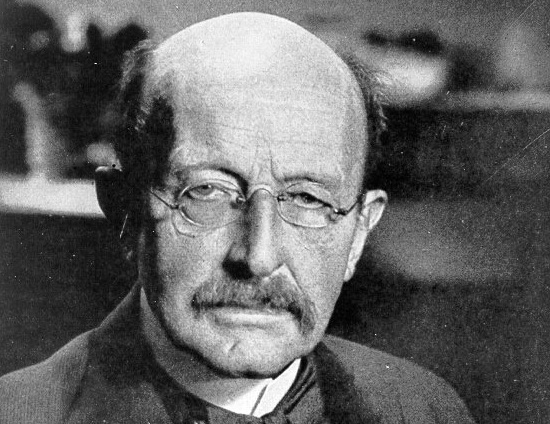

Max Planck and the origins of quantum theory

Max Planck (1858–1947).

The greatest crisis physics has ever known came to a head over afternoon tea on Sunday, 7 October 1900, at the home of Max Planck in Berlin. The son of a professor of jurisprudence, Planck had held the chair in theoretical physics at the University of Berlin since 1889, specializing in thermodynamics – the science of heat change and energy conservation. He could easily have been sidetracked into a different career. At the age of 16, having just entered the University of Munich, he was told by Philipp von Jolly, a professor there, that the task of physics was more or less complete. The main theories were in place, all the great discoveries had been made, and only a few minor details needed filling in here and there by generations to come. It was a view, disastrously wrong but widely held at the time, fueled by technological triumphs and the seemingly all-pervasive power of Newton's mechanics and Maxwell's electromagnetic theory.

Planck later recalled why he persisted with physics: "The outside world is something independent from man, something absolute, and the quest for the laws which apply to this absolute appeared to me as the most sublime scientific pursuit in life." The first instance of an absolute in nature that struck Planck deeply, even as a gymnasium (German high school) student, was the law of conservation of energy – the first law of thermodynamics. This says that you can't make or destroy energy, only change it from one form to another; or, to put it another way, the total energy of a closed system (one that energy can't enter or leave) always stays the same.

The second law

Later, Planck became equally convinced (but mistakenly, it turned out) that the second law of thermodynamics is an absolute. The second law, which includes the statement that you can't turn heat into mechanical work with 100% efficiency, crops up today in science in all sorts of different guises. It forms an important bridge between physics and information. When the second law was first introduced, in the 1850s and 1860s by Rudolf Clausius in Germany and William Thomson (Lord Kelvin) in Britain, however, it was in a form that came to be known as the entropy law. Physicists and engineers of this period were obsessed with steam engines, and for good reason. Steam engines literally powered the Industrial Revolution, so making them work better and more efficiently was of vital economic concern. An important early theoretical study of heat engines had been done in the 1820s by the French physicist Sadi Carnot, who showed that what drives a steam engine is the fall or flow of heat from higher to lower temperatures, like the fall of a stream of water that turns a mill wheel.

Clausius and Thomson took this concept and generalized it. Their key insight was that the world is inherently active, and that whenever an energy distribution is out of kilter, a potential or thermodynamic "force" is set up that nature immediately moves to quell or minimize. All changes in the real world can then be seen as consequences of this tendency. In the case of a steam engine, pistons go up and down, a crank turns, one kind of work is turned into another, but this is always at the cost of a certain amount of waste heat. Some coherent work (the atoms of the piston all moving in the same direction) turns into incoherent heat (hot atoms bouncing around at random). You can't throw the process into reverse, any more than you can make a broken glass jump off the floor and reassemble itself on a table again. You can't make an engine that will run forever; the engine runs in the first place only because the process is fundamentally unbalanced. (Would-be inventors of perpetual motion machines take note.)
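The claim that no engine can turn all of its heat into work has a simple quantitative form, usually called the Carnot limit, which grew out of Carnot's analysis once it was expressed in terms of absolute temperature. The minimal Python sketch below assumes an idealized engine working between a hot and a cold reservoir; the function name and the temperatures are illustrative assumptions, not historical data.

```python
# Illustrative sketch: the helper name is hypothetical, not from any library.


def carnot_efficiency(t_hot: float, t_cold: float) -> float:
    """Upper bound on the fraction of heat drawn from a reservoir at t_hot (kelvin)
    that any engine can turn into work while dumping waste heat at t_cold (kelvin)."""
    if not 0 < t_cold < t_hot:
        raise ValueError("need 0 < t_cold < t_hot, with temperatures in kelvin")
    return 1.0 - t_cold / t_hot


if __name__ == "__main__":
    # A hypothetical boiler at 450 K exhausting to surroundings at 300 K.
    eff = carnot_efficiency(450.0, 300.0)
    print(f"at most {eff:.1%} of the heat becomes work; at least {1 - eff:.1%} is waste heat")
```

Only a cold reservoir at absolute zero would permit 100% efficiency, which is why some waste heat is unavoidable in any real engine.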

Whereas the first law of thermodynamics deals with things that stay the same, or in which there's no distinction between past, present, and future, the second law gives a motivation for change in the world and a reason why time seems to have a definite, preferred direction. Time moves relentlessly along the path toward cosmic dullness. Kelvin spoke in doom-mongering terms of the eventual heat death of the universe when, in the far future, there will be no energy potentials left and therefore no possibility of further, meaningful change.

Entropy and time's arrow

Clausius coined the term entropy in 1865 to refer to the potential that's dissipated or lost whenever a natural process takes place. The second law, in its original form, states that the world acts spontaneously to minimize potentials, or, equivalently, to maximize entropy. Time's arrow points in the direction the second law operates – toward the inevitable rise of entropy and the loss of useful thermodynamic energy.
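Clausius's definition makes this bookkeeping quantitative: heat Q absorbed reversibly by a body at absolute temperature T changes its entropy by Q/T. The minimal Python sketch below uses that standard textbook formula with made-up illustrative numbers (the function names are hypothetical) to show why heat falling from hot to cold raises the total entropy, while the reverse flow would lower it.

```python
# Illustrative sketch: helper names are hypothetical; numbers are not historical data.


def entropy_change(heat: float, temperature: float) -> float:
    """Entropy change (J/K) of a reservoir held at `temperature` (K) that absorbs `heat` (J).
    Positive heat means heat absorbed; negative heat means heat given up."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return heat / temperature


def total_entropy_change(heat: float, t_hot: float, t_cold: float) -> float:
    """Net entropy change when `heat` joules pass from a reservoir at t_hot to one at t_cold."""
    lost_by_hot = entropy_change(-heat, t_hot)     # the hot body gives the heat up
    gained_by_cold = entropy_change(heat, t_cold)  # the cold body absorbs the same heat
    return lost_by_hot + gained_by_cold


if __name__ == "__main__":
    # Heat falling "downhill", from 500 K to 300 K: the total entropy goes up.
    print(f"hot to cold: {total_entropy_change(1000.0, 500.0, 300.0):+.3f} J/K")
    # The same heat forced "uphill" would lower the total, which the second law forbids.
    print(f"cold to hot: {total_entropy_change(1000.0, 300.0, 500.0):+.3f} J/K")
```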

For Max Planck, the second law and the concept of entropy held an irresistible attraction – the prospect of an ultimate truth from which all other aspects of the external world could be understood. These ideas formed the subject of his doctoral dissertation at Munich and lay at the core of almost all his work until about 1905. It was a fascination that impelled him toward the discovery for which he became famous. Yet, ironically, this discovery, and the revolution it sparked, eventually called into question the very separation between humankind and the world, between subject and object, for which Planck held physics in such high esteem.

Planck wasn't a radical or a subversive in any way; he didn't swim instinctively against the tide of orthodoxy. On the contrary, having come from a long line of distinguished and very respectable clergymen, statesmen, and lawyers, he tended to be quite staid in his thinking. At the same time, he also had a kind of aristocratic attitude to physics that led him to focus only on big, basic issues and to be rather dismissive of ideas that were more mundane and applied. His unswerving belief in the absoluteness of the entropy version of the second law, which he shared with few others, left him in a small minority in the scientific community. It also, curiously, led him to doubt the existence of atoms, and that was another irony given how events turned out.

Like other scientists of his day, Planck was intrigued by why the universe seemed to run in only one direction, why time had an arrow, why nature was apparently irreversible and always running down. He was convinced that this cosmic one-way street could be understood on the basis of the absolute validity of the entropy law. But here he was out of step with most of his contemporaries. The last decade of the 19th century saw most physicists falling into line behind an interpretation of the second law that was the brainchild of the Austrian physicist Ludwig Boltzmann. It was while Boltzmann was coming of age and completing his studies at the University of Vienna that Clausius and Thomson were hatching the second law, and Clausius was defining entropy and showing how the properties of gases could be explained in terms of large numbers of tiny particles dashing around and bumping into one another and the walls of their container (the so-called kinetic theory of gases). To these bold new ideas, in the 1870s, Boltzmann added a statistical flavor. Entropy, for example, he saw as a collective result of molecular motions. Given a huge number of molecules flying here and there, it's overwhelmingly likely that any organized starting arrangement will become more and more disorganized with time. Entropy will rise, with almost but not total certainty. So, although the second law remains valid according to this view, it's only in a probabilistic sense.
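Boltzmann's statistical reading can be illustrated with a toy model, which is an assumption of this sketch rather than Boltzmann's own calculation: N molecules, each equally likely to be found in the left or the right half of a box. Counting arrangements with Python's `math.comb` shows why an organized state such as "every molecule on the left" is almost never seen, while a roughly even split becomes nearly certain as N grows. The function names are hypothetical.

```python
# Illustrative sketch: a two-halves toy model; helper names are hypothetical.
from math import comb


def prob_left_count_between(n_molecules: int, low: int, high: int) -> float:
    """Probability that between `low` and `high` molecules (inclusive) are in the left half,
    assuming each molecule is independently equally likely to be in either half, so that
    all 2**n_molecules left/right arrangements are equally probable."""
    favorable = sum(comb(n_molecules, k) for k in range(low, high + 1))
    return favorable / 2**n_molecules  # exact integer ratio, rounded once to a float


def prob_all_left(n_molecules: int) -> float:
    """Probability of the perfectly 'organized' state with every molecule on the left."""
    return prob_left_count_between(n_molecules, n_molecules, n_molecules)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        near_even = prob_left_count_between(n, 4 * n // 10, 6 * n // 10)
        print(f"N = {n:4d}   P(all on left) = {prob_all_left(n):.2e}   "
              f"P(40% to 60% on left) = {near_even:.10f}")
```

Nothing in the model forbids the organized state; it is simply outnumbered by the disorganized ones, which is the sense in which the second law becomes a statement about probabilities.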

Some people were upset by Boltzmann's theory because it just assumed from the outset, without any attempt at proof, that atoms and molecules exist. One of its biggest critics was Wilhelm Ostwald, the father of physical chemistry (and Nobel Prize winner in 1909), who wanted to rid physics of the notion of atoms and base it purely on energy – a quantity that could be observed. Like other positivists (people who accept only what can be observed directly and who discount speculation), Ostwald stubbornly refused to believe in anything he couldn't see or measure. (Boltzmann took his own life in 1906, after years of depression that such persistent attacks on his views are often said to have worsened.) Planck wasn't a positivist. Far from it: like Boltzmann, he was a realist who time and again attacked Ostwald and the other positivists for their insistence on pure experience. Yet he also rejected Boltzmann's statistical version of thermodynamics because it cast doubt on the absolute truth of his cherished second law. It was this rejection, based on a physical rather than a philosophical argument, that led him to question the reality of atoms. In fact, as early as 1882, Planck decided that the atomic model of matter didn't jibe with the law of entropy. "There will be a fight between these two hypotheses that will take the life of one of them," he predicted. And he was pretty sure he knew which was going to lose out: "[I]n spite of the great successes of the atomistic theory in the past, we will finally have to give it up and to decide in favor of the assumption of continuous matter."

By the 1890s Planck had mellowed a bit in his stance against atomism – he'd come to realize the power of the hypothesis, even if he didn't like it – yet he remained adamantly opposed to Boltzmann's statistical theory. He was determined to prove that time's arrow and the irreversibility of the world stemmed not from the whim of molecular games of chance but from what he saw as the bedrock of the entropy law. And so, as the century drew to a close, Planck turned to a phenomenon that led him, really by accident, to change the face of physics.

Blackbody radiation

While a student at Berlin from 1877 to 1878, Planck had been taught by Gustav Kirchhoff, who, among other things, laid down some rules about how electrical circuits work (now known as Kirchhoff's laws) and studied the spectra of light given off by hot substances. In 1859, Kirchhoff proved an important theorem about ideal objects that he called blackbodies. A blackbody is something that soaks up every scrap of energy that falls upon it and reflects nothing – hence its name. It's a slightly confusing name, however, because a blackbody isn't just a perfect absorber: it's a perfect emitter as well. In one form or another, a blackbody gives back out every bit of energy that it takes in. If it's hot enough to give off visible light then it won't be black at all. It might glow red, orange, or even white. Stars, for example, despite the obvious fact that they're not black (unless they're black holes!), act very nearly as blackbodies; so, too, do furnaces and kilns, whose small openings let radiation escape only after it's been absorbed and reemitted countless times by the interior walls. Kirchhoff proved that the amount of energy a blackbody radiates from each square centimeter of its surface hinges on just two factors: the frequency of the radiation and the temperature of the blackbody. He challenged other physicists to figure out the exact nature of this dependency: What formula accurately tells how much energy a blackbody emits at a given temperature and frequency?

Experiments were carried out, using apparatus that behaved almost like a blackbody (a hot hollow cavity with a small opening), and equations were devised to try to match theory to observation. On the experimental side, the results showed that if you plotted the amount of radiation given off by a blackbody against frequency, it rose gently at low frequencies (long wavelengths), then climbed steeply to a peak, before falling away less precipitately on the high-frequency (short-wavelength) side. The peak drifted steadily to higher frequencies as the temperature of a blackbody rose, like the ridge of a barchan sand dune marching in the desert wind. For example, a warm blackbody might glow "brightest" in the (invisible) infrared and be almost completely dark in the visible part of the spectrum, whereas a blackbody at several thousand degrees radiates the bulk of its energy at frequencies we can see. Scientists knew that this was how perfect blackbodies behaved because their laboratory data, taken with apparatus that came very close to being a perfect blackbody, told them so. The sticking point was to find a formula, rooted in known physics, that matched these experimental curves across the whole frequency range. Planck believed that such a formula might provide the link between irreversibility and the absolute nature of entropy: his scientific holy grail.
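The drift of the peak can be checked numerically with the formula Planck eventually arrived at, taken here in its modern textbook form for spectral radiance (using it at this point in the story is an anachronism, and the function names and temperatures are illustrative assumptions). A ternary search locates the frequency of maximum output at several temperatures; dividing that frequency by the temperature gives the same number each time, so the peak moves up in direct proportion to temperature, the behavior now known as Wien's displacement law.

```python
# Illustrative sketch: helper names are hypothetical; constants are the exact 2019 SI values.
import math

H = 6.62607015e-34   # Planck's constant, J s
K_B = 1.380649e-23   # Boltzmann's constant, J/K
C = 299_792_458.0    # speed of light, m/s


def planck(nu: float, t: float) -> float:
    """Spectral radiance B_nu(T) from Planck's law, in W m^-2 Hz^-1 sr^-1."""
    x = H * nu / (K_B * t)
    return (2 * H * nu**3 / C**2) / math.expm1(x)  # expm1(x) = e**x - 1


def peak_frequency(t: float, iterations: int = 100) -> float:
    """Frequency (Hz) at which planck(nu, t) is largest, found by ternary search on log(nu).

    The curve has a single peak, so each step can safely discard a third of the bracket.
    The bracket spans 0.01 to 100 times k*T/h, which keeps the exponential far from overflow."""
    scale = K_B * t / H
    a, b = math.log(0.01 * scale), math.log(100.0 * scale)
    for _ in range(iterations):
        m1 = a + (b - a) / 3
        m2 = b - (b - a) / 3
        if planck(math.exp(m1), t) < planck(math.exp(m2), t):
            a = m1  # the peak lies to the right of m1
        else:
            b = m2  # the peak lies to the left of m2
    return math.exp((a + b) / 2)


if __name__ == "__main__":
    for t in (300.0, 1500.0, 6000.0):
        nu = peak_frequency(t)
        print(f"T = {t:6.0f} K   peak near {nu:.3e} Hz   peak / T = {nu / t:.4e} Hz/K")
```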

Matters seemed to be moving in a promising direction when, in 1896, Wilhelm Wien, of the Physikalisch-Technische Reichsanstalt (PTR) in Berlin, gave one of the strongest replies to the Kirchhoff challenge. "Wien's law" agreed well with the experimental data that had been gathered up to that point, and it drew the attention of Planck, who tried time and again to reach Wien's formula using the second law of thermodynamics as a springboard. It wasn't that Planck didn't have faith in the formula that Wien had found. He did. But he wasn't interested in a law that was merely empirically correct, or an equation that had been tailored to fit experimental results. He wanted to build Wien's law up from pure theory and thereby, hopefully, justify the entropy law. In 1899, Planck thought he'd succeeded. By assuming that blackbody radiation is produced by lots of little oscillators like miniature antennae on the surface of the blackbody, he found a mathematical expression for the entropy of these oscillators from which Wien's law followed.

Planck's formula

Then came a hammer blow. Several of Wien's colleagues at the PTR – Otto Lummer, Ernst Pringsheim, Ferdinand Kurlbaum, and Heinrich Rubens – did a series of careful tests that undermined the formula. By the autumn of 1900, it was clear that Wien's law broke down at lower frequencies – in the far infrared (waves longer than heat waves) and beyond. On that fateful afternoon of 7 October, Herr Doktor Rubens and his wife visited the Planck home and, inevitably, the conversation turned to the latest results from the lab. Rubens gave Planck the bad news about Wien's law.

After his guests left, Planck set to thinking where the problem might lie. He knew how the blackbody formula, first sought by Kirchhoff four decades earlier, had to look mathematically at the high-frequency end of the spectrum given that Wien's law seemed to work well in this region. And he knew, from the experimental results, how a blackbody was supposed to behave in the low-frequency regime. So, he took the step of putting these relationships together in the simplest possible way. It was a guess, no more – a "lucky intuition," as Planck put it – but it turned out to be absolutely dead on. Between tea and supper, Planck had the formula in his hands that told how the energy of blackbody radiation is related to frequency. He let Rubens know by postcard the same evening and announced his formula to the world at a meeting of the German Physical Society on 19 October.
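In modern notation, Planck's law for the spectral radiance is B_ν(T) = (2hν³/c²) / (e^(hν/kT) − 1). Wien's law has the same exponential shape; this sketch writes it with the same modern constants (an assumption made for easy comparison, since Wien fitted his own constants) so that it is simply Planck's formula without the "− 1". The function names and the 1500 K temperature are illustrative.

```python
# Illustrative sketch: helper names are hypothetical; constants are the exact 2019 SI values.
import math

H = 6.62607015e-34   # Planck's constant, J s
K_B = 1.380649e-23   # Boltzmann's constant, J/K
C = 299_792_458.0    # speed of light, m/s


def planck(nu: float, t: float) -> float:
    """Planck's law in its modern form: spectral radiance in W m^-2 Hz^-1 sr^-1."""
    x = H * nu / (K_B * t)
    return (2 * H * nu**3 / C**2) / math.expm1(x)  # expm1(x) = e**x - 1, accurate for small x


def wien(nu: float, t: float) -> float:
    """Wien's law written with the same constants: Planck's formula without the '- 1'."""
    x = H * nu / (K_B * t)
    return (2 * H * nu**3 / C**2) * math.exp(-x)


if __name__ == "__main__":
    t = 1500.0  # kelvin; an illustrative furnace temperature
    for nu in (1e12, 1e13, 1e14, 3e14):  # from the far infrared up to the near infrared, in Hz
        print(f"nu = {nu:.0e} Hz   Wien / Planck = {wien(nu, t) / planck(nu, t):.4f}")
```

The ratio works out to 1 − e^(−hν/kT): close to 1 at high frequencies, where Wien's law held up, but far below 1 in the far infrared, where the PTR measurements showed it failing.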

One of the myths of physics, which is echoed time and again in books, both academic and popular, and in college courses, even today, is that Planck's blackbody formula had something to do with what's called the "ultraviolet catastrophe." It didn't. This business of the ultraviolet catastrophe is a bit of a red herring (to mix colorful metaphors), worth mentioning here only to set the record straight. In June 1900, the eminent English physicist Lord Rayleigh (plain John Strutt before he became a baron) pointed out that if you assume something known as the equipartition of energy, which has to do with how energy is distributed among a bunch of molecules, then classical mechanics blows up in the face of blackbody radiation. The amount of energy a blackbody emits just shoots off the scale at the high-frequency end – utterly in conflict with the experimental data. Five years later, Rayleigh and his fellow countryman James Jeans came up with a formula, afterward known as the Rayleigh-Jeans law, that shows exactly how blackbody energy is tied to frequency if you buy into the equipartition of energy. The name "ultraviolet catastrophe," inspired by the hopelessly wrong prediction at high frequencies, wasn't coined until 1911 by the Austrian physicist Paul Ehrenfest. None of this had any bearing on Planck's blackbody work. Planck hadn't heard of Rayleigh's June 1900 comments when he came up with his new blackbody formula in October. In any case, it wouldn't have mattered: Planck didn't accept the equipartition theorem as fundamental. So the "ultraviolet catastrophe," which sounds very dramatic and as if it were a turning point in physics, doesn't really play a part in the revolution that Planck ignited.
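Equipartition gives every radiation mode an average energy kT, which leads to the Rayleigh-Jeans law; in spectral-radiance units its standard textbook form is B_ν = 2ν²kT/c². The sketch below compares it with Planck's formula at an illustrative 1500 K (function names are hypothetical). The two agree at low frequency, but the Rayleigh-Jeans value keeps growing as ν², the high-frequency blow-up that Ehrenfest later named.

```python
# Illustrative sketch: helper names are hypothetical; constants are the exact 2019 SI values.
import math

H = 6.62607015e-34   # Planck's constant, J s
K_B = 1.380649e-23   # Boltzmann's constant, J/K
C = 299_792_458.0    # speed of light, m/s


def planck(nu: float, t: float) -> float:
    """Planck's law: spectral radiance in W m^-2 Hz^-1 sr^-1."""
    x = H * nu / (K_B * t)
    return (2 * H * nu**3 / C**2) / math.expm1(x)


def rayleigh_jeans(nu: float, t: float) -> float:
    """Rayleigh-Jeans law: each mode gets average energy kT, so radiance grows as nu**2."""
    return 2 * nu**2 * K_B * t / C**2


if __name__ == "__main__":
    t = 1500.0  # kelvin; illustrative
    for nu in (1e11, 1e13, 1e14, 1e15):
        print(f"nu = {nu:.0e} Hz   Rayleigh-Jeans / Planck = {rayleigh_jeans(nu, t) / planck(nu, t):.4g}")
```

At 10¹¹ Hz the two formulas agree to better than 1%, while at 10¹⁵ Hz the equipartition value is larger by a factor of more than 10¹².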

Planck had his formula in October 1900 and it was immediately hailed as a major breakthrough. But the forty-two-year-old theorist, methodical by nature and rigorous in his science, wasn't satisfied with simply having the right equation. He knew that his formula rested on little more than an inspired guess. It was vital to him to be able to derive it, as he had done with Wien's law – logically, systematically, from scratch. So began, as Planck recalled, "a few weeks of the most strenuous work of my life." To achieve his fundamental derivation, Planck had to make what was, for him, a major concession. He had to yield ground to some of the work that Boltzmann had done. At the same time, he wasn't prepared to give up his belief that the entropy law was absolute, so he reinterpreted Boltzmann's theory in his own nonprobabilistic way. This was a crucial step, and it led him to an equation, now usually called Boltzmann's entropy formula, that ties entropy to molecular disorder.

We're all familiar with how, at the everyday level, things tend to get more disorganized over time. The contents of houses, especially of teenagers' rooms, become more and more randomized unless energy is injected from outside the system (parental involvement) to tidy them up. What Planck found was a precise relationship between entropy and the level of disorganization in the microscopic realm.
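In modern notation the relationship is usually written as below, where W is the number of microscopic arrangements compatible with a given overall state and k is the constant, now called Boltzmann's constant, that first appeared explicitly in Planck's work of 1900. The logarithm is what makes entropy add up for two independent systems, whose numbers of arrangements multiply:

```latex
S = k \ln W,
\qquad
W = W_1 W_2 \;\Longrightarrow\; S = k \ln W_1 + k \ln W_2 = S_1 + S_2
```

More arrangements means more disorder and a larger entropy; a state that can be realized in only one way (W = 1) has S = 0.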

Energy quanta

To put a value on molecular disorder, Planck had to be able to add up the number of ways a given amount of energy can be spread among a set of blackbody oscillators; and it was at this juncture that he had his great insight. He brought in the idea of what he called energy elements – little snippets of energy into which the total energy of the blackbody had to be divided in order to make the formulation work. By late 1900, Planck had built his new radiation law from the ground up, having made the extraordinary assumption that energy comes in tiny, indivisible lumps. In the paper he wrote, presented to the German Physical Society on 14 December, he talked about energy "as made up of a completely determinate number of finite parts" and introduced a new constant of nature, h, with the fantastically small value of about 6.6 × 10⁻²⁷ erg second. This constant, now known as Planck's constant, connects the size of a particular energy element to the frequency of the oscillators associated with that element.
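Planck's counting step can be reproduced in a few lines. The sketch below uses the standard textbook form of his combinatorics, W = (N + P − 1)! / (P! (N − 1)!) ways to share P energy elements of size ε = hν among N oscillators, together with S = k ln W and the thermodynamic rule 1/T = dS/dU. Working in units where k = 1 and ε = 1, estimating dS/dU with a finite difference, and the helper names are all assumptions of this sketch. For large N the counted mean energy per oscillator matches the closed form ε / (e^(ε/kT) − 1), the ingredient from which Planck's radiation law follows. The sketch also converts one energy element of 5 × 10¹⁴ Hz light (a hypothetical visible frequency) into ergs, using the value of h quoted above.

```python
# Illustrative sketch: helper names are hypothetical, not from any library.
import math

H_ERG_S = 6.62607015e-27  # Planck's constant in erg seconds (about 6.6e-27, as quoted above)


def energy_element(nu_hz: float) -> float:
    """Size of one energy element, epsilon = h * nu, in ergs."""
    return H_ERG_S * nu_hz


def log_complexions(n_oscillators: int, p_elements: int) -> float:
    """ln W, where W = (N + P - 1)! / (P! (N - 1)!) counts the ways to share P
    indivisible energy elements among N distinguishable oscillators."""
    n, p = n_oscillators, p_elements
    return math.lgamma(n + p) - math.lgamma(p + 1) - math.lgamma(n)  # lgamma(m + 1) = ln m!


def mean_energy_from_counting(n_oscillators: int, p_elements: int) -> tuple[float, float]:
    """Return (temperature, mean energy per oscillator) in units where k = 1 and epsilon = 1.
    The temperature comes from 1/T = dS/dU, estimated by a central difference with S = ln W."""
    ds_du = (log_complexions(n_oscillators, p_elements + 1)
             - log_complexions(n_oscillators, p_elements - 1)) / 2.0
    return 1.0 / ds_du, p_elements / n_oscillators


def planck_mean_energy(temperature: float) -> float:
    """Closed form epsilon / (exp(epsilon / kT) - 1), in the same units (k = 1, epsilon = 1)."""
    return 1.0 / math.expm1(1.0 / temperature)


if __name__ == "__main__":
    print(f"one energy element of 5e14 Hz light: {energy_element(5e14):.2e} erg")
    t, u = mean_energy_from_counting(100_000, 250_000)
    print(f"T = {t:.4f}   counted mean energy = {u:.4f}   closed form = {planck_mean_energy(t):.4f}")
```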

Something new and extraordinary had happened in physics, even if nobody immediately caught on to the fact. For the first time, someone had hinted that energy isn't continuous. It can't, as every scientist had blithely assumed up to that point, be traded in arbitrarily small amounts. Energy comes in indivisible bits. Planck had shown that energy, like matter, can't be chopped up indefinitely. (The irony, of course, is that Planck still doubted the existence of atoms!) It's always transacted in tiny parcels, or quanta. And so Planck, who was anything but a maverick or an iconoclast, began the transformation of our view of nature and the birth of quantum theory.

It was to be a slow delivery. Physicists, especially Planck (the "reluctant revolutionary" as one historian called him), didn't quite know what to make of this bizarre suggestion of the graininess of energy. In truth, compared with all the attention given to the new radiation law, this weird quantization business at its heart was pretty much overlooked. Planck certainly didn't pay it much heed. He said he was driven to it in an "act of despair" and that "a theoretical interpretation had to be found at any price." To him, it was hardly more than a mathematical trick, a theorist's sleight of hand. As he explained in a letter written in 1931, the introduction of energy quanta was "a purely formal assumption and I really did not give it much thought except that no matter what the cost, I must bring about a positive end." Far more significant to him than the strange quantum discontinuity (whatever it meant) was the impressive accuracy of his new radiation law and the new basic constant it contained. This lack of interest in the strange energy elements has led some historians to question whether Planck really ought to be considered the founder of quantum theory. Certainly, he didn't see his work at the time as representing any kind of threat to classical mechanics or electrodynamics. On the other hand, he did win the 1918 Nobel Prize in Physics for his "discovery of energy quanta." Perhaps it would be best to say that Planck lit the spark and then withdrew. At any rate, the reality of energy quanta was definitely put on a firm footing a few years later, in 1905 – by the greatest genius of the age, Albert Einstein.

For more about the history and physics of quantum theory, see Einstein and the photoelectric effect.